Why we don’t use a CDN: A story about SPDY and SSL

By on February 5, 2014

Last week we moved to a new SSL-everywhere setup for this website. We were really excited to implement SSL across the board, but nervous about the impact on site performance. Therefore, we made it a priority to focus on performance during the transition. Using a CDN (content delivery network) for the new site was a foregone conclusion, as we assumed it would help us speed things up. But after testing with a few different CDNs, we uncovered some surprising results.

Before the switch

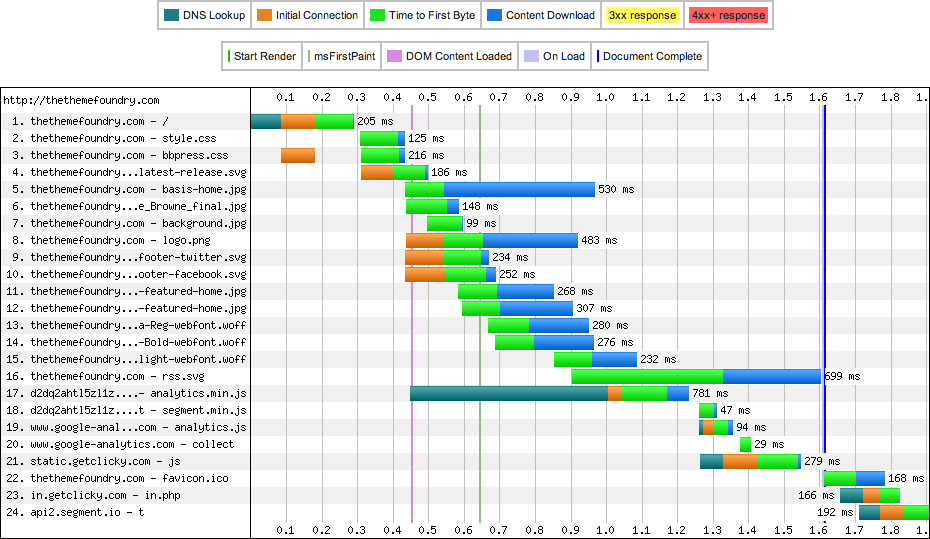

Before we talk about where the site stands in terms of performance, let’s take a look at how well the site did prior to this switch.

There are a few things that are important to notice about this figure. First, the time to first byte (TTFB) is 205ms. This TTFB value is the DNS lookup time (84ms; in blue-green) + the initial TCP handshake (95ms; in orange) + the time it takes to receive the first HTML header response (26ms; in bright green). A TTFB of 205ms is quite respectable and means that we are serving WordPress really quickly and our network connection is sufficient. Second, the whole site loads in 1600ms (1.6s; the blue line). We have some additional scripts that fire afterward, but those do not affect the painting of the page. Third, notice the diagonal trend of the waterfall. It’s a nice visualization of assets loading in serial. Modern browsers can make a number of connections at the same time to a single host, but each of these requires a separate TCP connection. This leads to the diagonal waterfall. Finally, notice the 6 unique patches of orange through item 16. These orange strips represent the time that it takes for the browser to connect to the server. As you can see, this happens quite a bit.

The old site will be our baseline for measuring performance on the new site. Unfortunately, we cannot make 1:1 comparisons between the sites because the two servers are hosted in different locations (New Jersey vs. Texas). In hindsight, I really wish we had these two servers in the same physical location, as it would allow much stronger conclusions. Even so, I think the results I am about to present are still quite interesting.

Why SSL has performance issues

SSL has inherent performance issues. At the most basic level, SSL requires additional round-trip transfers between the server and the browser. To give you an example of the expected performance impact, in the figure above, the TCP handshake took 95ms. This is essentially the round-trip time (RTT) between the test location (Los Angeles) and the server (New Jersey). SSL negotiations require two extra round-trips between the browser and the server. As such, if we simply placed a well-configured SSL implementation on our old servers, we would expect the SSL negotiation to take 2 x 95ms (190ms), thereby increasing the TTFB by 190ms. Additionally, if your server isn’t configured correctly for SSL, you will likely incur a lot of additional overhead as well. Ilya Grigorik documents how you are likely incurring 5 extra round-trips if you are using vanilla Nginx. Clearly, when adding SSL into the mix, careful consideration of the performance impact is important.
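The arithmetic above can be sketched in a few lines of Python. This is only a back-of-the-envelope model: the timings are the ones read off the old site's waterfall, the helper name `ttfb_ms` is made up for illustration, and it assumes each SSL round-trip costs exactly one TCP handshake time.

```python
# Phase timings (ms) read off the old site's waterfall for the first request.
DNS_MS = 84
TCP_HANDSHAKE_MS = 95    # treated here as one round-trip (RTT)
FIRST_RESPONSE_MS = 26


def ttfb_ms(dns_ms, tcp_ms, response_ms, ssl_round_trips=0):
    """Estimate TTFB, charging one RTT (the TCP handshake time) per SSL round-trip."""
    ssl_ms = ssl_round_trips * tcp_ms
    return dns_ms + tcp_ms + ssl_ms + response_ms


baseline = ttfb_ms(DNS_MS, TCP_HANDSHAKE_MS, FIRST_RESPONSE_MS)
with_ssl = ttfb_ms(DNS_MS, TCP_HANDSHAKE_MS, FIRST_RESPONSE_MS, ssl_round_trips=2)
print(baseline, with_ssl, with_ssl - baseline)  # 205 395 190
```

Under the same assumption, passing `ssl_round_trips=5` (the poorly tuned case) would add 475ms instead of 190ms.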

Getting Nginx ready for SSL

Heeding Ilya’s warnings, we manually compiled the mainline version of Nginx. We used Nginx 1.5.9 (to avoid the “large certificate” bug), compiled it against OpenSSL 1.0.1e (to enable NPN), and enabled Perfect Forward Secrecy (which adds even more security), all in an effort to incur no more than 2 extra round-trips when bringing SSL to our site. We added the following directives to our server block for the site:

ssl_protocols TLSv1 TLSv1.1 TLSv1.2;

ssl_prefer_server_ciphers on;

ssl_ciphers ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:ECDH+3DES:DH+3DES:RSA+AESGCM:RSA+AES:RSA+3DES:!aNULL:!MD5:!DSS;

ssl_buffer_size 8k;

The first line declares the TLS versions that we support. This allows the site to support a wide array of secure connections with browsers. The only browser that is not supported is IE6, and that was acceptable for us. IE6 only supports SSL 3.0 or lower, which is susceptible to a renegotiation vulnerability. The next two lines tell Nginx to use the server’s cipher preferences and list our ciphers in order, which lets us prefer more secure ciphers over less secure ones. We decided to implement cipher suite recommendations from Hynek Schlawack, which are validated by Qualys SSL Labs. Finally, we reduced the size of the SSL buffer (the default is 16k) in order to avoid a buffer overflow scenario that would require additional round-trips. In the end, this implementation registered as an A on SSL Labs, an excellent tool for examining your SSL implementation.
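To see how the cipher string is put together, here is a small Python sketch. It relies on the OpenSSL cipher list format, in which entries are separated by colons, `+` combines requirements, and `!` permanently excludes a group. The function names `split_cipher_string` and `expand_locally` are made up for this example, and the expansion depends entirely on the OpenSSL build that your Python links against, so its output will differ from what Nginx negotiates on another machine.

```python
import ssl

# The value of the ssl_ciphers directive shown above.
CIPHERS = (
    "ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:"
    "ECDH+3DES:DH+3DES:RSA+AESGCM:RSA+AES:RSA+3DES:!aNULL:!MD5:!DSS"
)


def split_cipher_string(cipher_string):
    """Separate the preferred cipher groups (in order) from the '!' exclusions."""
    tokens = cipher_string.split(":")
    preferred = [t for t in tokens if not t.startswith("!")]
    excluded = [t[1:] for t in tokens if t.startswith("!")]
    return preferred, excluded


def expand_locally(cipher_string):
    """Ask the local OpenSSL build which cipher suites the string selects."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.set_ciphers(cipher_string)  # raises ssl.SSLError if nothing matches
    return [cipher["name"] for cipher in ctx.get_ciphers()]


if __name__ == "__main__":
    preferred, excluded = split_cipher_string(CIPHERS)
    print("most preferred group:", preferred[0])
    print("excluded groups:", excluded)
    for name in expand_locally(CIPHERS):
        print(name)
```

Reading the groups left to right shows the intent of the list: forward-secret key exchanges (ECDH, DH) come before plain RSA, and AES-GCM comes before the older modes.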

With this server overhaul, we also made sure to implement SPDY with Nginx. SPDY comes standard in Nginx as of 1.3.15. Earlier versions of Nginx required manual compiling to support SPDY. SPDY has become the starting point for HTTP 2.0. SPDY is a protocol layer that sits on top of SSL and provides HTTP multiplexing, prioritization, and server push. Our hope was that if we suffered any performance degradation due to SSL, we could make up the time from SPDY’s reported performance improvements. Fortunately, right before we launched, the SPDY 3.1 patch, sponsored by Automattic and MaxCDN, was released and we could roll it into our Nginx implementation.

If you are interested in getting a similar Nginx setup, I recommend taking a look at our compile instructions for Nginx. These instructions also compile Nginx with PageSpeed and Nginx Cache Purge support.

A CDN for more speed

We have been contemplating implementing a CDN on the site for a while. We decided to make it happen with this update. Again, we thought that adding a speed improvement via a CDN could help mitigate the SSL performance issues. We had some really interesting experiences exploring a CDN provider, which we’ll write about later. Initially, we chose MaxCDN, which was really quick to set up and implement. We used Mark Jaquith’s CDN drop-in with a few customizations for https compatibility.

Performance with the CDN

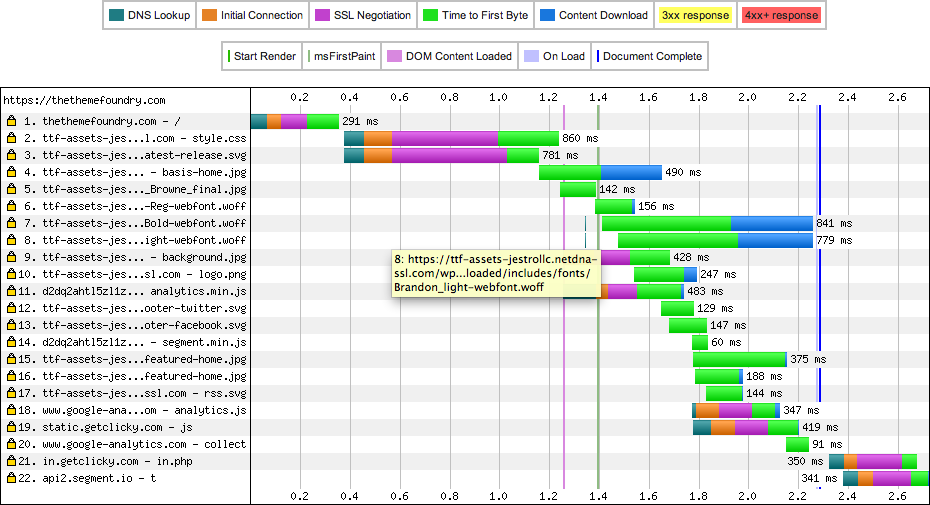

With everything in place, I eagerly went to test the site.

Just in case you forgot, this server is not located in the same location as our original server, so we have to be careful when comparing numbers between the tests; however, we can still draw some interesting conclusions.

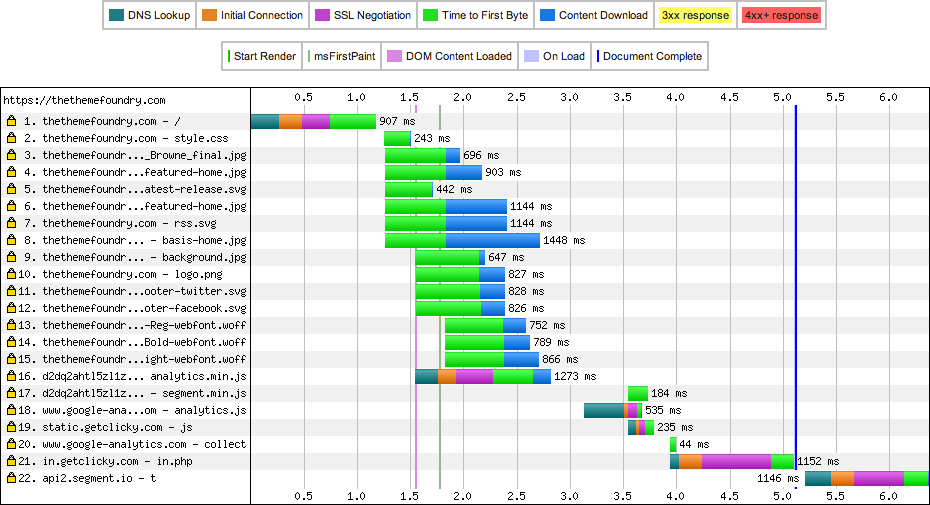

The first thing to notice here is that there is indeed an extra color in our race to TTFB. The purple band in item #1 represents the SSL negotiation. We expected that this would be here. The initial connection, which represents our round-trip time, weighed in at 57ms and the SSL negotiation took 103ms. This suggests that we successfully avoided the extra round-trips that Ilya warned about and were somewhere in the range of 1-2 extra round-trips, which is quite acceptable. If all we did was implement SSL, we would have lost only 1-2 round-trips’ worth of performance, which is the expected result. This result alone made me think that we had accomplished our performance goals.

Unfortunately, there are 21 other lines to inspect in this figure and they did not look good. On the second line, SSL negotiation time is bothersome. This line represents the download of our site’s CSS file from MaxCDN’s servers. This request involves a DNS lookup (76ms; blue-green), a TCP connection (110ms; orange), an SSL negotiation (429ms; purple) and the content download (243ms; bright green). Notice that the SSL negotiation was roughly 4 times the length of the initial TCP connection. Clearly, the SSL negotiation is not well tuned. We spent a lot of time tuning our SSL connection for speed and now we are suffering from slow SSL negotiation on the CDN. This was not the result I was hoping for when we implemented the CDN.
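One rough way to compare the two negotiations is to divide the SSL negotiation time by the TCP connection time, treating the TCP connection time as one round-trip. The sketch below does that with the numbers quoted above; the function name `ssl_to_rtt_ratio` is made up for illustration, and the ratio is only an approximation, since the negotiation time also includes server-side processing.

```python
def ssl_to_rtt_ratio(tcp_connect_ms, ssl_negotiation_ms):
    """Rough number of round-trips spent in SSL, using TCP connect time as the RTT."""
    return ssl_negotiation_ms / tcp_connect_ms


# Item 1: the HTML request to our own server.
origin = ssl_to_rtt_ratio(tcp_connect_ms=57, ssl_negotiation_ms=103)
# Item 2: the CSS request to the CDN.
cdn = ssl_to_rtt_ratio(tcp_connect_ms=110, ssl_negotiation_ms=429)

print(f"origin: {origin:.1f} RTTs, CDN: {cdn:.1f} RTTs")  # origin: 1.8 RTTs, CDN: 3.9 RTTs
```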

Another thing to note about the CDN performance (items 2-13, 15-16) is that this negotiation is repeated 3 times. Additionally, we incur extra DNS lookups, which slow our asset loads. Fortunately, I believe that the last negotiation uses SSL session resumption, so it is much quicker. Finally, notice the diagonal waterfall of the elements. We are seeing the same serial download pattern that we saw in our pre-launch site tests.

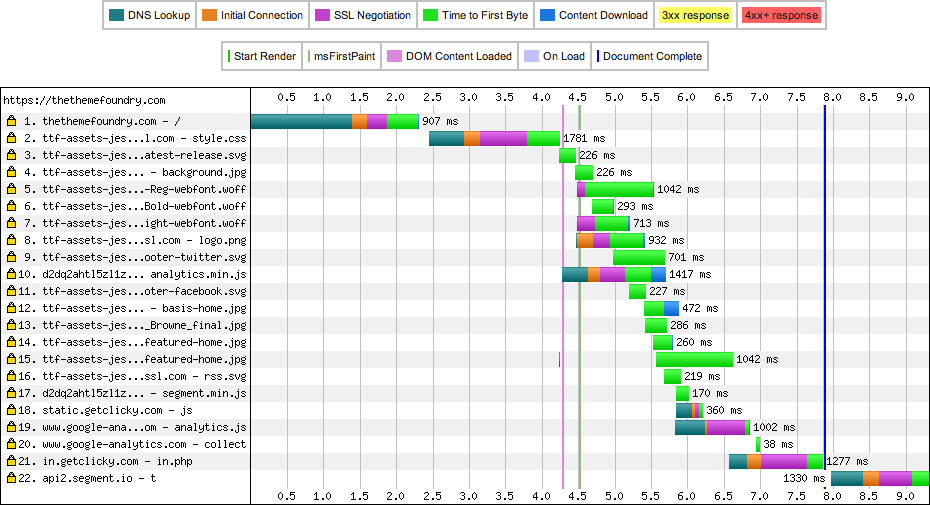

With CDN farther from the server

One of the primary benefits of a CDN is placing assets physically closer to site visitors, which reduces round-trip time. As we are beginning to see, round-trip time is an important consideration. If you cannot reduce the number of round-trips, perhaps you can reduce the time they take. A CDN can help with this. As such, I decided to perform a test from Australia to see how our site performed, primarily because our Designer, Scott, lives down there and I wanted to see how things worked out for him.

Again, we are seeing great SSL negotiation times with our site. The round-trip time is in the 200ms range, which is similar to the round-trip time to our servers. What I was hoping for is that even though the initial time to connect to our site was poor, the CDN would help pick up some of the slack and serve the assets really quickly. This did not appear to happen in this case; asset delivery was hindered by the slow SSL negotiation and serial connections.

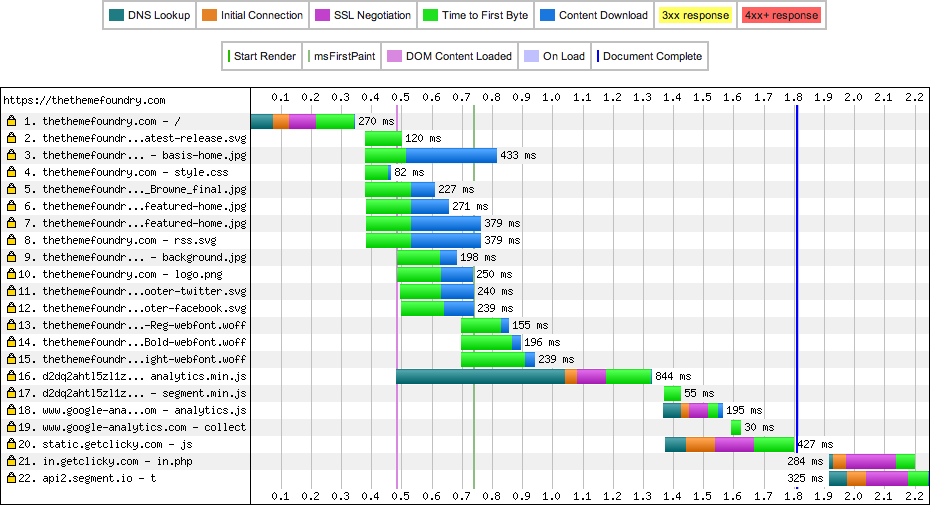

Without the CDN

With the disappointing CDN results, we decided to take a look at how things performed without it.

These results blew my mind. We are seeing the same great SSL negotiation times. Then, for items 2-15 (our assets), there are no additional SSL negotiation or connection times. SPDY’s HTTP multiplexing is allowing all of these requests to occur over the same TCP connection. We do not need to make additional connections or SSL negotiations. All of the items on the page are loaded in just over 900ms, which is outstanding (yes, that’s faster than the old site, but the server is also closer).

Also notice that there is less diagonal flow to this waterfall. This shape is due to how SPDY allows multiple HTTP requests over the same TCP connection. It is important to note that while using the CDN, we received no benefit from SPDY. By removing the CDN, we start to see the benefit of reusing a TCP connection and SSL negotiation.

Clearly, SPDY is providing a huge benefit for us here. While we lose 70-90ms to SSL negotiation, we save far more time by not creating additional connections, thanks to HTTP multiplexing.
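To make the trade-off concrete, here is a minimal Python sketch of the setup cost an asset pays before its request can even be sent. The CDN timings are the ones measured for the CSS request in the CDN test earlier in this post. The class name `ConnectionSetup` is made up for illustration, and the model ignores download time and parallelism, so treat it as an illustration rather than a measurement.

```python
from dataclasses import dataclass


@dataclass
class ConnectionSetup:
    """Time (ms) spent before the first request can be sent on a connection."""
    dns_ms: int = 0
    tcp_ms: int = 0
    ssl_ms: int = 0

    def total_ms(self) -> int:
        return self.dns_ms + self.tcp_ms + self.ssl_ms


# A new connection to the CDN host (timings from the CSS request in the CDN test).
cdn_new_connection = ConnectionSetup(dns_ms=76, tcp_ms=110, ssl_ms=429)

# An asset requested over the already-open SPDY connection to our own server:
# no DNS lookup, TCP handshake, or SSL negotiation is needed.
spdy_reused_connection = ConnectionSetup()

print(cdn_new_connection.total_ms())      # 615
print(spdy_reused_connection.total_ms())  # 0
```

In this simplified view, every new connection to the CDN starts several hundred milliseconds behind a request that rides on the existing SPDY connection.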

Without a CDN in Australia

The previous results were awesome and beyond our wildest dreams. Now, we needed to make a decision about the CDN. Yes, the CDN performance was not great, but perhaps it does provide a measurable improvement for Scott down in Australia.

I honestly did not expect this result. I thought the CDN would be much better than SPDY from a single location. All of our assets loaded via the CDN in just under 5 seconds. It only took ~2.7s to get those same assets to our friends down under with SPDY. The performance with no CDN blew the CDN performance out of the water. There is just no comparison. In our case, it really seems that the advantages of SPDY greatly outweigh those of a CDN when it comes to speed.

It should be noted that we did try other CDNs. Unfortunately, we found similar results. We found CDNs with better SSL negotiation times and got the total asset load time down to 4.5s from Australia, but the multiplexing just worked better for us than placing assets in a closer physical location.

Caveats

In writing this article, I wanted to highlight the changes to our site with the new infrastructure that we implemented, while not overstating any of the conclusions. There is nothing scientific about these tests. In particular:

- Our pre- and post-launch server locations were different. Not all tests were on the same hardware, though it was comparable.

- All tests were conducted in Chrome, which is the most performance-advanced browser available. Roughly 50% of our visitors use Chrome, so it certainly makes the decision easier for us.

- We did not look at a number of locations around the world. We focused on the US and Australia.

- We only looked at the home page, which is quite important; however, other pages serve more images and assets.

Last thoughts

While the conclusion for us was to remove the CDN, it is important to note that this decision may or may not be the right one for you. For our site, we saw a huge benefit of HTTP multiplexing. Assets were delivered really fast, we avoided extraneous connections, and we could benefit from our speedy SSL negotiations. Speed is only one benefit of a CDN. In our case, speed was the only thing that we wanted from it and we did not get it.

In hindsight, it was almost silly for us to implement SPDY and a CDN. By sharding assets to another domain, we really lose all of the benefits of SPDY. It definitely was not clear to me how SPDY would benefit us, but after taking a closer look, SPDY paid off in a major way. It was hard to find a CDN provider that used SPDY. It will be pretty amazing when you can combine SPDY and CDN benefits, which is theoretically possible with a CDN provider that provides SPDY and allows SSL on CNAMEs.

As a developer or sysadmin, it is important to measure things that can be measured and make decisions based on these measurements. If we had not tested the CDN performance, we would still be using it, thinking that we were seeing a performance boost, not to mention paying for a service that was not giving us the intended benefit. We are excited that this investment in Nginx and SPDY has paid off for us!
